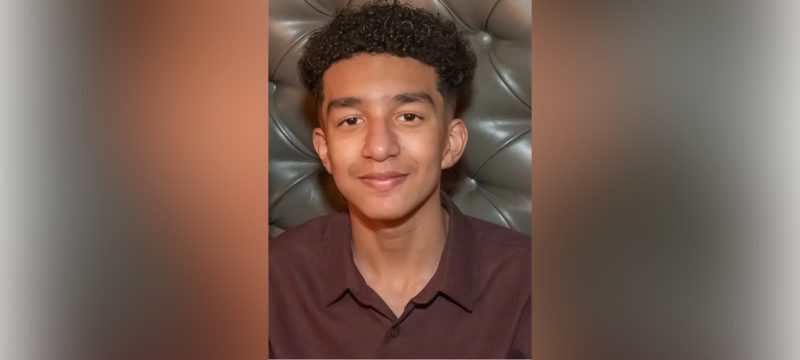

Character.AI is facing a lawsuit following the suicide of 14-year-old Sewell Setzer III of Orlando, Florida. The boy's mother alleges that her son became increasingly obsessed with a chatbot on the platform and withdrew emotionally from reality as a result.

According to reports, Setzer spent months talking with various chatbots on the Character.AI role-playing app and formed a strong emotional bond with one in particular, named "Dany." The attachment deepened until Setzer began to isolate himself from friends and family. Shortly before his death, he shared his suicidal thoughts with the chatbot.


In response, Character.AI announced several new safety features. These include improved detection of and responses to chats that violate the platform's terms of service, as well as a notification that alerts users once they have spent an hour in a chat.

The lawsuit raises critical questions about the responsibility of AI platforms to monitor and support users' mental health, especially among vulnerable teenagers. As debates over AI ethics continue, the effect of digital interactions on mental well-being remains a pressing concern.