Nvidia has unveiled its latest generation of graphics processing units (GPUs) for AI training, the Blackwell series, claiming an improvement of up to 25x in energy efficiency. The company says the new GPUs will significantly reduce the cost of AI processing.

The flagship product in this release is the Nvidia GB200 Grace Blackwell Superchip, which combines multiple chips within the same package. Nvidia claims the new architecture delivers up to a 30x increase in performance for Large Language Model (LLM) inference workloads compared to the Nvidia H100 Tensor Core GPU.

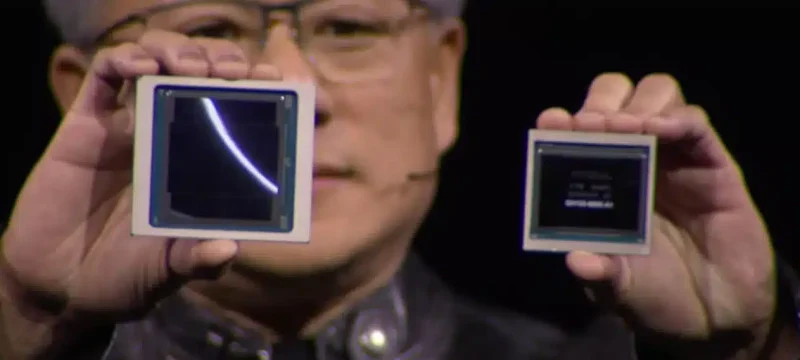

During a keynote presentation at Nvidia GTC 2024, CEO Jensen Huang introduced the Blackwell series to an audience of thousands of engineers, highlighting it as a transformative milestone in computing. While gaming products are on the horizon, Huang’s focus was on the technological advancements driving this new generation.

In a light-hearted moment during the keynote, Huang joked that the two prototypes he was holding were worth $10 billion and $5 billion respectively.

Nvidia asserts that Blackwell-based supercomputers will let organizations worldwide deploy trillion-parameter large language models and run generative AI in real time, while reducing cost and power consumption by up to 25x.

According to Nvidia, the platform will scale to accommodate AI models with up to 10 trillion parameters.

In addition to generative AI processing, the new Blackwell AI GPUs, named after mathematician David Harold Blackwell, will be utilized in data processing, engineering simulation, electronic design automation, computer-aided drug design, and quantum computing.

On the technical side, each Blackwell GPU packs 208 billion transistors and is manufactured on a custom-built TSMC 4NP process, using two reticle-limit dies joined into a single GPU.

NVLink enables bidirectional throughput of 1.8TB/s per GPU, facilitating high-speed communication across a network of up to 576 GPUs, ideal for handling intricate Large Language Models (LLMs).
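As a rough illustration of the scale involved, the two figures quoted above can be multiplied out. This is only a back-of-envelope sketch: it sums per-GPU link throughput and is not a measured fabric or bisection bandwidth. The function name is made up for this example.

```python
# Figures quoted in the article
NVLINK_TB_PER_S_PER_GPU = 1.8  # bidirectional throughput per GPU
MAX_GPUS = 576                 # largest NVLink-connected group mentioned


def summed_nvlink_tb_per_s(gpus: int, per_gpu: float = NVLINK_TB_PER_S_PER_GPU) -> float:
    """Naive sum of per-GPU NVLink throughput (hypothetical helper).

    Real network throughput depends on topology and traffic pattern.
    """
    return gpus * per_gpu


total = summed_nvlink_tb_per_s(MAX_GPUS)
print(f"{total:.1f} TB/s (about {total / 1000:.2f} PB/s) summed over {MAX_GPUS} GPUs")
```

By this naive sum, a full 576-GPU group would carry on the order of a petabyte per second of combined link throughput.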

At the core of this innovation is the NVIDIA GB200 NVL72, a rack-scale system engineered to deliver 1.4 exaflops of AI performance and equipped with 30TB of fast memory.
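To put the rack-level numbers in per-GPU terms, here is a quick sketch. It assumes the rack holds 72 GPUs, a count read off the "NVL72" product name and not stated in this article, and it treats the quoted memory as evenly divisible across GPUs, which may not match how the system's memory is actually attached.

```python
# Rack-level figures quoted in the article
RACK_EXAFLOPS = 1.4    # AI performance of one GB200 NVL72 rack
RACK_MEMORY_TB = 30.0  # "fast memory" per rack

# Assumption: 72 GPUs per rack, inferred from the "NVL72" name
GPUS_PER_RACK = 72


def per_gpu_share(rack_total: float, gpus: int = GPUS_PER_RACK) -> float:
    """Evenly divide a rack-level figure across GPUs (hypothetical helper)."""
    return rack_total / gpus


petaflops_per_gpu = per_gpu_share(RACK_EXAFLOPS * 1000)  # 1 exaflop = 1000 petaflops
memory_gb_per_gpu = per_gpu_share(RACK_MEMORY_TB * 1000)  # 1 TB = 1000 GB (decimal)

print(f"~{petaflops_per_gpu:.1f} petaflops and ~{memory_gb_per_gpu:.0f} GB per GPU")
```

Under those assumptions, each GPU's share works out to roughly 19 petaflops of AI performance and a little over 400 GB of the rack's fast memory.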

The GB200 Superchip represents a significant advancement, offering up to a 30-fold increase in performance for LLM inference workloads compared to the Nvidia H100 Tensor Core GPU, while simultaneously reducing costs and energy consumption by up to 25 times.

Cisco, Dell Technologies, Hewlett Packard Enterprise, Lenovo, and Supermicro are set to introduce a variety of servers leveraging Blackwell products. Additionally, other companies are expected to deliver servers equipped with Blackwell-based technology, signaling broad industry adoption.

Major cloud providers, server manufacturers, and prominent AI companies are expected to adopt the Blackwell platform, which could change how computing is done across many industries.